SpaceFind w/ HoloLens 2

Augmented reality research and design centered on AR telepresence and mutual space finding with HoloLens 2. (In Progress)

Overview

XR telepresence refers to a set of technologies that allow a person to feel present, to appear present, or to have an effect at a place other than their true location. As AR technologies improve, telepresence is becoming a higher priority because it lays the foundation for a variety of applications: it will let remote callers appear as holographic avatars in the same room, let children play in-person games virtually, and support countless other use cases.

One of the largest obstacles to a working telepresence system is mutual space finding: when users are in different rooms, each with its own furniture arrangement, it is difficult to calculate the physical space that all users can freely share. This is where Mohammad Keshavarzi’s previously designed system comes into play. Published at IEEE VR 2020, the paper's algorithm calculates the square footage and dimensions of each HoloLens user's room and projects the ideal mutual space that they all share. The algorithm also suggests alternative room layouts, with corresponding directions for moving furniture, in order to optimize the mutual space for the users.

I joined their team as a designer and researcher to help create a better interface for the application and to improve the user experience of the system itself. As this is still unpublished research, I cannot share video demos or specific details of the project on this page; please inquire for more details.

Role & Duration

AR Designer

User Research, HoloLens 2 AR Design, User Testing, Cinema4D, Adobe After Effects, Figma, Unity3D

Teammate: Woojin Ko

Advisors: Prof. Luisa Caldas, Mohammad Keshavarzi

November 2020 - Present

Mutual Space Interface

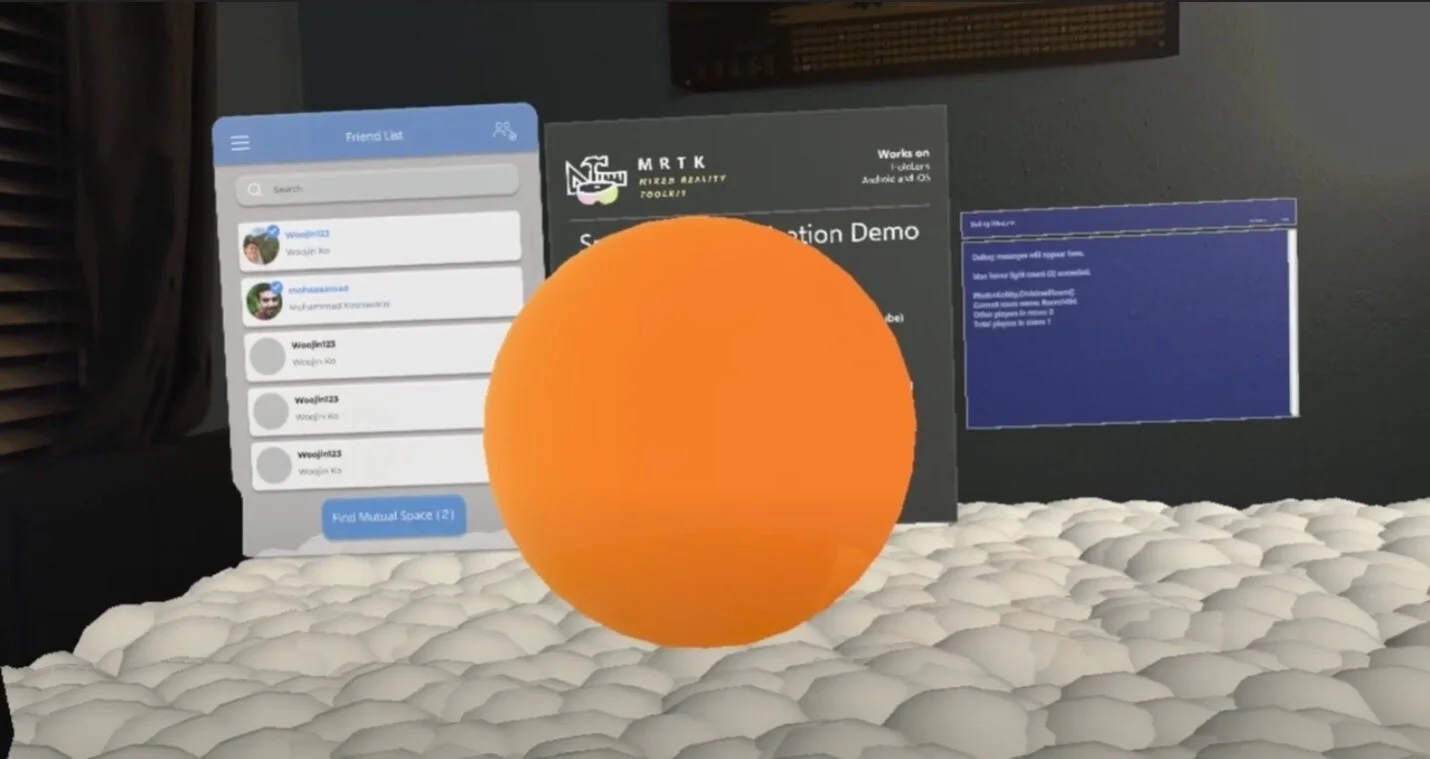

To design an interface that illustrates the shared mutual space between HoloLens users, I took inspiration from third-person games and the Oculus Guardian to render an “orb field” that would depress depending on the location of the virtual user. This would allow users to see where others are in the space while also distinguishing between one another. Currently, there are some technical obstacles to implementing this design, and we are using a more static placeholder until they are resolved.
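The "depress depending on location" behavior can be pictured as a falloff function over the orbs' floor positions. The following is only a minimal sketch, not the project's implementation: the function name `orb_offset`, the `radius` and `max_depth` parameters, and the cosine falloff are all assumptions chosen for illustration.

```python
import math


def orb_offset(orb_xy, user_xy, radius=1.0, max_depth=0.3):
    """Downward offset (in metres) for one orb in the field.

    Hypothetical model: orbs directly under the virtual user sink by
    max_depth, and the effect fades smoothly to zero at `radius`.
    """
    dist = math.hypot(orb_xy[0] - user_xy[0], orb_xy[1] - user_xy[1])
    if dist >= radius:
        return 0.0  # orb is outside the user's area of influence
    t = dist / radius  # 0 at the user, 1 at the edge of influence
    # Cosine falloff gives a smooth bowl shape with no hard edge.
    return max_depth * 0.5 * (1.0 + math.cos(math.pi * t))


# Offsets for a small row of orbs as a virtual user stands at x = 0.
row = [(x * 0.25, 0.0) for x in range(-4, 5)]
offsets = [orb_offset(p, (0.0, 0.0)) for p in row]
```

Giving each remote user a separate color or falloff radius would be one way to keep users distinguishable, though that choice is also an assumption here.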

Furniture Movement Interface

Using differently colored arrows and boxes, the furniture movement interface shows users which furniture to move and in what direction. The user interface that pops up also depicts a visualization of the current room, along with a bird's-eye view of the same directions that are overlaid onto the furniture itself. Alongside the room visualization, a few metrics aid the user: the square footage of the shared mutual space, the amount of effort required to move each piece of furniture, and whether or not the mutual space is a continuous environment.
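To make two of these metrics concrete, here is a minimal sketch of how the shared area and the continuity check could be computed. It is not the published algorithm: it assumes each room has already been reduced to a grid of free/occupied cells aligned in a common frame, and the names `mutual_space`, `area_sq_ft`, and `is_continuous` are hypothetical.

```python
from collections import deque


def mutual_space(grids):
    """Cell-wise AND of the rooms' free-space grids (True = free floor)."""
    rows, cols = len(grids[0]), len(grids[0][0])
    return [[all(g[r][c] for g in grids) for c in range(cols)]
            for r in range(rows)]


def area_sq_ft(mutual, cell_size_ft):
    """Shared area, given the side length of one grid cell in feet."""
    return sum(cell for row in mutual for cell in row) * cell_size_ft ** 2


def is_continuous(mutual):
    """True if all free cells form one 4-connected region."""
    free = {(r, c) for r, row in enumerate(mutual)
            for c, cell in enumerate(row) if cell}
    if not free:
        return False
    start = next(iter(free))
    seen, queue = {start}, deque([start])
    while queue:  # breadth-first flood fill from one free cell
        r, c = queue.popleft()
        for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if nb in free and nb not in seen:
                seen.add(nb)
                queue.append(nb)
    return len(seen) == len(free)


# Two toy 3x3 rooms; 0 marks a cell blocked by furniture.
room_a = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
room_b = [[1, 1, 0], [1, 1, 0], [1, 1, 1]]
shared = mutual_space([room_a, room_b])
print(area_sq_ft(shared, 1.0), is_continuous(shared))  # 6.0 True
```

The effort metric is left out because the source does not say how effort is measured.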

User Interface

The introductory UI of the experience is a menu that lets the user select which friends they would like to share a mutual space with; once they are selected, the algorithm calculates the mutual space and displays it on the HoloLens.

Future Direction

This is my first time working with a head-mounted AR device, the HoloLens 2, and I have learned a lot already. There are many more factors to consider when designing for the HoloLens, including the opacity of user interfaces, hand gestures for interaction, and the texturing of 3D assets. I have also learned a lot about the workflow between 3D applications and Unity, including exporting assets and animations, creating technically feasible designs, and working with a game engine in general. There is still a lot more work to be done, including fleshing out the user interfaces to complete the user flow; I would also like to explore how to design better assets that make the experience more enjoyable and more convenient for the user. Through user testing, I hope we can gather useful insights so that I can iterate on my designs and continue working on this project. We are aiming for a conference publication later this year, with a demo video illustrating a sample user flow through the entire application.